In the third article of this EDA Applications and Benefits in Smart Manufacturing series, we highlighted the first of a series of manufacturing applications that leverage the capabilities of the EDA / Interface A suite of standards in leading semiconductor manufacturers. In this fourth article, we’ll highlight the application that has been the principal driver for the adoption of EDA across the industry thus far, namely Fault Detection and Classification (FDC).

Problem Statement

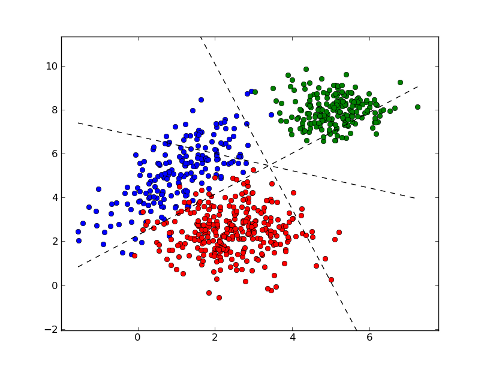

The problem that FDC addresses is the prevention of scrap that may result from processing material on equipment that has drifted out of its acceptable operating window for whatever reason. The prevalent technique used by today’s leading FDC systems is to develop “reduced dimension” statistical fault models for the various production operating points based on training sets of “good” and “bad” runs. These models are then evaluated in real time with key parameters (usually trace data) collected from the equipment during processing to detect process deviation and predict impending tool failure. In the most advanced fabs, the FDC software is deeply integrated with the systems that manage process flow, and can even interdict equipment operation in mid-run to prevent or reduce scrap production.

Of course, the challenge with this type of algorithm is developing models that are “tight” enough to catch all sources of potential faults (i.e., eliminating false negatives) while leaving enough wiggle room to minimize the number of false positives (also known as false alarms, or crying “Wolf!”). This in turn requires high quality data from the equipment, and lots of process engineering and statistical analysis expertise to develop and update the fault models for the range of production cases that must be handled. High-mix foundry environments exacerbate this situation.

Solution Components

The core of modern FDC systems is a robust multivariate statistics analysis toolbox, capable of handling large amounts of time series data. By “large,” we mean both the number of distinct equipment parameters and the number of samples for each parameter. These software tools collapse potentially hundreds of parameters into a small set of “principal components” that can be calculated on-the-fly using a limited set (say, 20-30) of equipment parameters. Some number of these principal components in aggregate represent the actual state of the process accurately enough to detect deviations from the norm, and since they can be realistically calculated in real time, the application serves as an on-line equipment health monitor.
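
As a rough illustration of the dimension-reduction idea, the sketch below fits a principal component model to synthetic “good” runs and scores new runs with Hotelling's T² statistic. It is a minimal sketch under stated assumptions, not how any particular FDC product works: the data is simulated, the function names (`fit_pca_model`, `t2_score`) are hypothetical, the model is trained on good runs only, and the alarm threshold is simply the 99th percentile of the training scores.

```python
import numpy as np

def fit_pca_model(good_runs, n_components):
    """Fit a PCA model to good runs (rows = runs, columns = equipment parameters)."""
    mean = good_runs.mean(axis=0)
    std = good_runs.std(axis=0, ddof=1)
    std[std == 0] = 1.0  # guard against constant parameters
    scaled = (good_runs - mean) / std
    # Rows of vt are the principal directions of the scaled data.
    _, singular_values, vt = np.linalg.svd(scaled, full_matrices=False)
    variances = singular_values ** 2 / (len(good_runs) - 1)
    return {
        "mean": mean,
        "std": std,
        "components": vt[:n_components],
        "variances": variances[:n_components],
    }

def t2_score(model, run):
    """Hotelling's T^2: squared distance from normal behavior in principal component space."""
    scaled = (run - model["mean"]) / model["std"]
    scores = model["components"] @ scaled
    return float(np.sum(scores ** 2 / model["variances"]))

# Synthetic training set (assumption): 25 parameters driven by 2 hidden process factors.
rng = np.random.default_rng(0)
mixing = rng.normal(size=(2, 25))
latent = rng.normal(size=(500, 2))
good_runs = latent @ mixing + 0.1 * rng.normal(size=(500, 25))

model = fit_pca_model(good_runs, n_components=2)

# Simplified empirical threshold (assumption): 99th percentile of training scores.
threshold = np.percentile([t2_score(model, run) for run in good_runs], 99)

# A run whose hidden factors have drifted far outside the training range.
faulty_run = np.array([8.0, -8.0]) @ mixing
is_fault = t2_score(model, faulty_run) > threshold
```

T² only sees deviations that lie within the retained components. Production systems typically pair it with a residual statistic to catch deviations outside that subspace, and they derive thresholds more carefully than this percentile shortcut.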

The other major solution component for a production FDC system is a fault model library management capability that can handle large numbers of models. This is necessary because the multivariate approach includes little or no awareness of the physical meaning of the principal components (i.e., they are not “first principles” based), so different operating points for the equipment must have their own sets of fault models. The proper models for a given operating point are selected by matching the values of the “context parameters” for a specific run to those used to store the models. Even if some models can be shared across a range of operating points, the number of distinct models for a foundry megafab will still number in the thousands.
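
The context-matching step can be pictured as a keyed lookup. The sketch below is an assumption-laden illustration: the context fields (`tool_type`, `chamber`, `recipe`, `product`), the wildcard convention for shared models, and the `ModelLibrary` class are all hypothetical and not taken from any standard or product.

```python
from typing import NamedTuple, Optional

WILDCARD = "*"  # hypothetical marker meaning "model applies to any value"

class Context(NamedTuple):
    """Hypothetical context parameters that identify an operating point."""
    tool_type: str
    chamber: str
    recipe: str
    product: str

class ModelLibrary:
    """Stores fault model identifiers keyed by the context they were built for."""

    def __init__(self) -> None:
        self._models: dict[Context, str] = {}

    def store(self, context: Context, model_id: str) -> None:
        self._models[context] = model_id

    def select(self, run_context: Context) -> Optional[str]:
        """Return the most specific stored model whose key matches the run."""
        best_id, best_specificity = None, -1
        for key, model_id in self._models.items():
            matches = all(k == WILDCARD or k == v for k, v in zip(key, run_context))
            specificity = sum(k != WILDCARD for k in key)
            if matches and specificity > best_specificity:
                best_id, best_specificity = model_id, specificity
        return best_id

library = ModelLibrary()
# A model shared across all products for one recipe, plus a product-specific one.
library.store(Context("etch", "A", "oxide_etch_v3", WILDCARD), "model-shared")
library.store(Context("etch", "A", "oxide_etch_v3", "device_x"), "model-device-x")

selected = library.select(Context("etch", "A", "oxide_etch_v3", "device_x"))
fallback = library.select(Context("etch", "A", "oxide_etch_v3", "device_y"))
missing = library.select(Context("cvd", "B", "nitride_dep", "device_x"))
```

Preferring the most specific match lets a shared model cover a range of operating points while a dedicated model overrides it where needed. A linear scan is fine for illustration, but a library with thousands of models would want an indexed store.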

EDA (Equipment Data Acquisition) Standards Leverage

In an advanced fab, there is a spectrum of data collection alternatives available for a given application, from basic lot-level summary information to detailed real-time data that can be used at the substrate level or even on a die/site basis. For FDC, this spectrum of possibilities is shown in the table below.

|

SEMI Standard Level |

Functionality |

Benefit |

|

GEM/GEM300 |

Fault models difficult to change after initial development if data collection requirements change |

Baseline |

|

EDA Freeze I (1105) |

Easy to change equipment data collection plans as fault models evolve and require new data; Model development environment can be separate from production system |

Engineering labor reduction; improved fault models and lower false alarm rate |

|

EDA Freeze II (0710) |

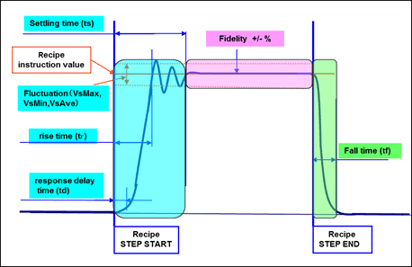

Use conditional triggers to precisely “frame” trace data while reducing overall data collection needs; Incorporate sub-fab component/subsystem data into fault models |

Even better fault models; reduced MTTD (mean time to detect) of fault or process excursion; little or no data post-processing required |

|

EDA Common Metadata (E164) |

Include standard recipe step-level transition events for highly targeted trace data collection; Automate initial equipment characterization process by using metadata model to generate required data collection plans |

Faster tool characterization and fault model development time |

|

Factory-Specific |

Incorporate previously unavailable equipment signals in fault models; Update data collection plans and fault models automatically after process and recipe changes; Include recipe setpoints in the equipment metadata models |

TBD (Not yet applicable) |

The left column refers to the level of SEMI standards used to provide the necessary equipment data. The “Functionality” column describes how that data is used in an FDC context, and the “Benefit” column highlights the potential impact these functions can have.

Let’s say that a fab implemented the capability described on rows 3 and 4 of the table (EDA Freeze II (0710) and E164-compliant EDA Common Metadata). In this case, the process equipment will be able to provide detailed process parameters at recipe step-specific sampling rates sufficient to evaluate the “feature extraction” algorithms of even the most demanding FDC models, along with the context data needed to select the precise set of models for a given process condition. And even though the specific equipment parameters are necessarily process dependent, much of the software that monitors recipe execution events, generates the data collection plans (DCPs) that provide the trace data, and assembles the context data used by the model management library can be truly generic because of the fab-wide consistency of the equipment interfaces dictated by the E164 standard.
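
To make the idea of “framing” trace data with recipe step events concrete, here is a small client-side sketch. It does not use any real EDA interface: the `Sample` type, the event tuples, and the `frame_trace` function are hypothetical, and in an actual deployment the start and stop triggers would be defined in the data collection plan and evaluated by the equipment rather than filtered after the fact.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    """One trace data point (hypothetical structure)."""
    timestamp: float
    value: float

def frame_trace(samples, step_events, step_name):
    """Keep only the samples collected between a step's start and end events.

    step_events is a list of (timestamp, step_name, kind) tuples,
    where kind is "start" or "end".
    """
    start = end = None
    for timestamp, name, kind in step_events:
        if name != step_name:
            continue
        if kind == "start":
            start = timestamp
        elif kind == "end":
            end = timestamp
    if start is None or end is None:
        return []  # step never ran to completion; nothing to frame
    return [s for s in samples if start <= s.timestamp <= end]

# Ten samples at one-second intervals, with a main etch step from t=3 to t=6.
samples = [Sample(timestamp=float(t), value=100.0 + t) for t in range(10)]
step_events = [
    (0.0, "stabilize", "start"),
    (3.0, "stabilize", "end"),
    (3.0, "main_etch", "start"),
    (6.0, "main_etch", "end"),
]

framed = frame_trace(samples, step_events, "main_etch")
framed_times = [s.timestamp for s in framed]
```

Framing at the source is what reduces the collection load. This sketch only shows which samples a step-bounded trace would contain.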

Another aspect of the EDA standards that FDC teams can leverage is the system architecture flexibility enabled by the multi-client capability. Even while a piece of equipment is connected to a production data management infrastructure, the process engineers and statisticians who develop and refine the fault models can use an independent data collection system tailored for process behavior analysis, experimentation, and continuous improvement. When the new fault models are ready for production, the production DCPs can be updated with these new requirements.

KPIs Affected

FDC is considered a “mission-critical” application in today’s fabs because of the high cost of unscheduled equipment downtime and the importance of maintaining high product yield. Simply stated, “if FDC is down, the tool is down,” which means that the real-time data collection infrastructure supporting this application is likewise mission critical. As such, improvements in FDC performance can have a major impact on fab performance.

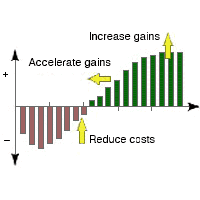

Specifically, FDC directly affects the process yield and scrap rate KPIs through higher fault detection sensitivity, and it affects equipment availability and related KPIs by reducing the number of false alarms that often require equipment to be taken out of production.

So what?

A wise colleague advised me early in my career to always have an answer for this question at the end of every presentation, article, or conversation. To answer this question in financial terms for this posting, let’s consider the cost of FDC false alarms for a production 300mm fab.

Assuming that

- an hour of tool time is worth US$2200, a qual wafer costs $250, and an hour of engineering/technician time costs $150, and

- it takes 5 hours of tool time, 2 hours of engineering time, and 6 qual wafers to resolve a false alarm, then

each false alarm costs the company about $12.8K (5 × $2200 + 2 × $150 + 6 × $250 = $12,800). For a fab with 2000 pieces of equipment and an average false alarm rate of 2 per tool per year, that comes to an annual cost of just over $51M! A 50% reduction in the false alarm rate (which is not unreasonable) nets more than $25M of savings per year.
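
For readers who want to plug in their own numbers, the calculation can be written out as a short script. The input values are the assumptions listed above, and the variable and function names are illustrative only.

```python
# Assumptions from the list above (US dollars).
TOOL_HOUR_COST = 2200
QUAL_WAFER_COST = 250
ENGINEERING_HOUR_COST = 150

def false_alarm_cost(tool_hours, engineering_hours, qual_wafers):
    """Cost of resolving a single false alarm."""
    return (
        tool_hours * TOOL_HOUR_COST
        + engineering_hours * ENGINEERING_HOUR_COST
        + qual_wafers * QUAL_WAFER_COST
    )

cost_per_alarm = false_alarm_cost(tool_hours=5, engineering_hours=2, qual_wafers=6)

tools = 2000
alarms_per_tool_per_year = 2
annual_cost = tools * alarms_per_tool_per_year * cost_per_alarm

# Savings from halving the false alarm rate.
annual_savings = annual_cost * 0.5

print(f"Cost per false alarm: ${cost_per_alarm:,}")
print(f"Annual cost:          ${annual_cost:,}")
print(f"Savings at 50% fewer: ${annual_savings:,.0f}")
```

Tool time dominates the result: $11,000 of the $12,800 per alarm comes from the five hours of lost equipment availability.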

If this sounds like “real money” to you, give us a call. We can help you understand how to get on the Smart Manufacturing path with the kind of standards-based data collection infrastructure that is needed to support the latest generation of FDC systems and beyond.

To learn more about the EDA/Interface A standards and their automation requirements, download the EDA/Interface A white paper today.